Fullsphere Irradiance Factorization for Real-time All-frequency Illumination for Dynamic Scenes

Authors: Despina Michael and Yiorgos Chrysanthou

Journal publication: Computer Graphics Forum, Volume 29, Issue 8, pages 2516–2529, December 2010.

Keywords: Real-time rendering, All-frequency illumination

Download the full paper here.

Abstract

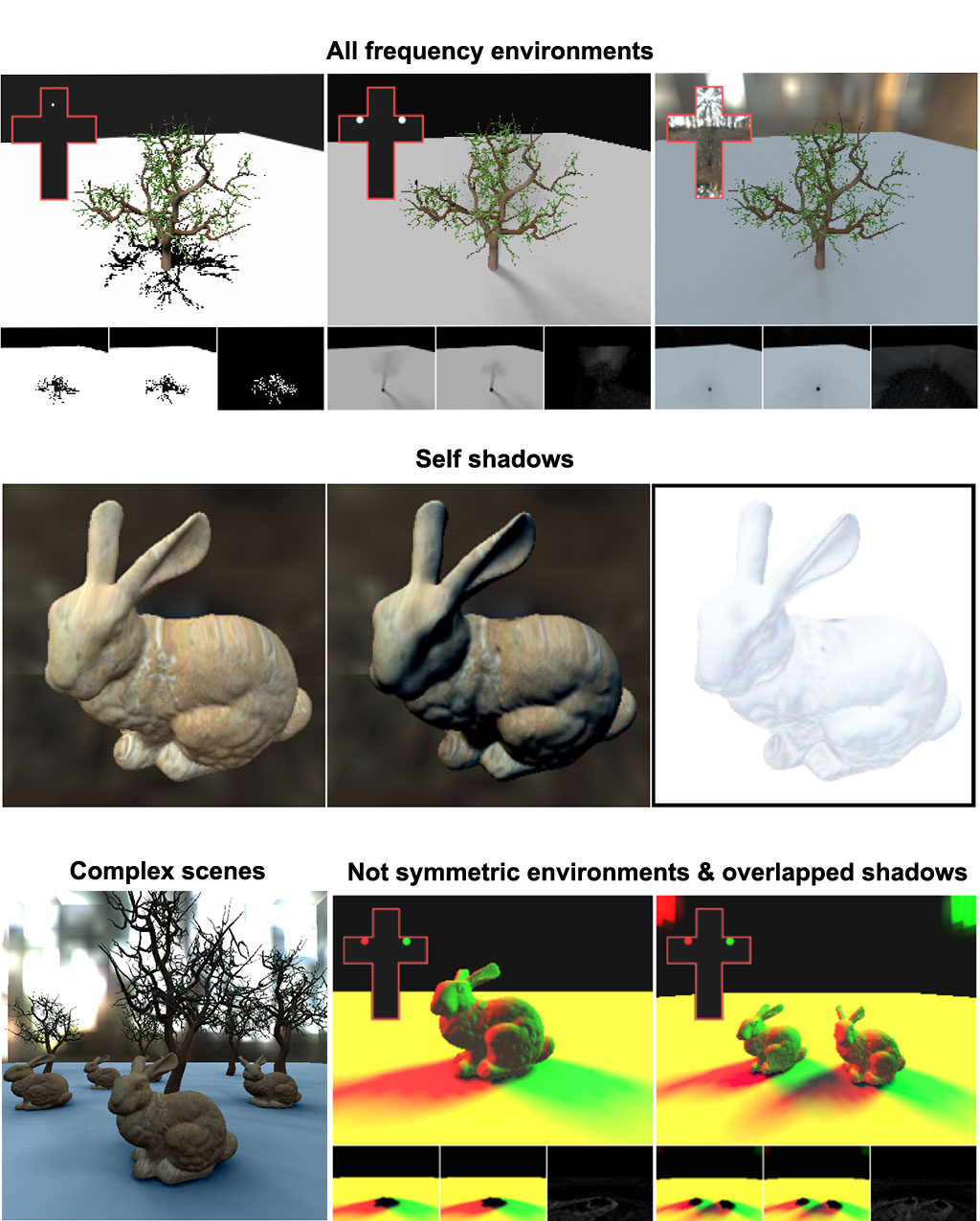

Computing illumination with soft shadows from all-frequency environment maps is a computationally expensive process. Using precomputation adds the limitation that the receiver’s geometry must be known in advance, since the Irradiance computation takes the receiver’s normal direction into account. We propose a method that accumulates the contribution to the Irradiance on a possible receiver from all light sources in the scene, without knowing the receiver’s geometry. This expensive computation is done during preprocessing. The precomputed value is used at run time to compute the Irradiance arriving at any receiver with a known direction. We show how this technique lets us compute soft shadows and self-shadows in real time from all-frequency environments, with only modest memory requirements. A GPU implementation of the method yields high frame rates even for complex scenes with dozens of dynamic occluders and receivers.

Contributions & Strengths of our method

- The main contribution of our technique is the factorization of a new notion that we introduce, the Fullsphere Irradiance. The factorization allows us to precompute and store, in only a 3D color vector, the contribution to illumination from an arbitrary number of light sources on a reference base system, without requiring the receiver’s normal in advance. Once the receiver and its normal are known, the precomputed values can be transformed very quickly (using only a dot product) into the Irradiance arriving from the light sources.

- The proposed method can compute diffuse illumination with soft shadows for fully dynamic receivers, moving occluders and all-frequency environment maps at real-time frame rates.

- Frame rate and memory requirements are independent of the number of light sources and the number of vertices of the occluders. Frame rate depends only on the number of occluders in the scene, while the memory requirements depend on the number of sample points used and the different types of objects in the scene.

- The size and the complexity of the occluders do not affect the computations for illumination at run time.

- Our technique requires only moderate memory and relatively fast precomputations.

- Our method can run in real time for very complex scenes, with low measured error.
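
The factorization idea in the first contribution can be illustrated with a small sketch. It is not the paper's implementation: it assumes, purely for illustration, that the cosine term is left unclamped, so that a sum over directional lights becomes linear in the receiver's normal and can be pulled out as a single dot product. The function names, the list-of-lights representation, and the storage of one 3D vector per color channel are all hypothetical; the paper's actual definition of Fullsphere Irradiance and its storage layout may differ.

```python
def dot(a, b):
    """Dot product of two 3-component sequences."""
    return sum(x * y for x, y in zip(a, b))


def precompute_vectors(lights):
    """Accumulate all lights once, without knowing any receiver normal.

    lights: list of (direction, rgb) pairs, direction being a unit 3-vector.
    Returns one 3D vector per color channel: sum_i rgb_i[c] * direction_i.
    (Hypothetical layout, chosen only for this sketch.)
    """
    vecs = [[0.0, 0.0, 0.0] for _ in range(3)]
    for direction, rgb in lights:
        for c in range(3):
            for k in range(3):
                vecs[c][k] += rgb[c] * direction[k]
    return vecs


def irradiance_from_vectors(vecs, normal):
    """Run time: one dot product per color channel, independent of light count."""
    return [dot(v, normal) for v in vecs]


def irradiance_brute_force(lights, normal):
    """Reference: loop over every light for the given normal.

    The cosine is deliberately NOT clamped to zero (assumption of this
    sketch); that is what makes the sum factor exactly.
    """
    out = [0.0, 0.0, 0.0]
    for direction, rgb in lights:
        cos_term = dot(direction, normal)
        for c in range(3):
            out[c] += rgb[c] * cos_term
    return out
```

Under that assumption, `irradiance_from_vectors(precompute_vectors(lights), n)` matches `irradiance_brute_force(lights, n)` for any normal `n`, while its run-time cost no longer depends on the number of lights.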

Results